Next-gen imaging takes pictures that speak a million pixels

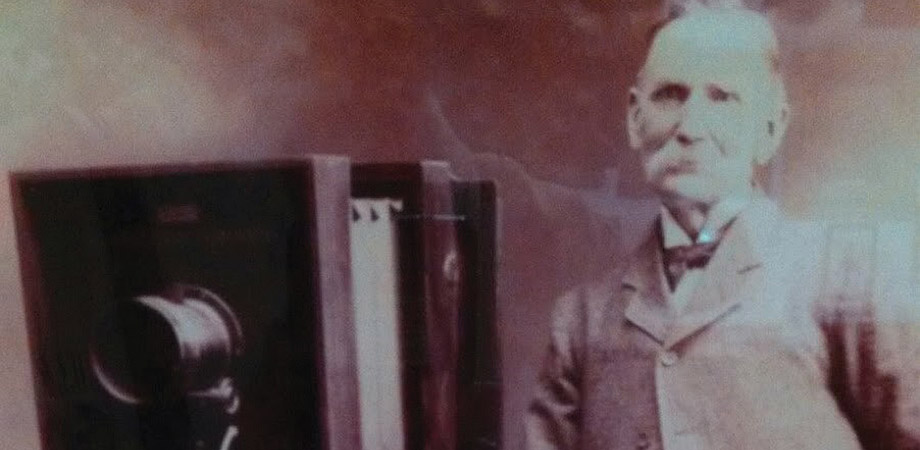

When Mads Frederik Christensen, a 19th-century photographer from Copenhagen, wanted to take pictures, he had to build his own camera, like all photographers of his day. A sepia-toned image from the time shows Christensen, with a bushy white moustache and up-turned collar, standing next to his device, a boxy affair about the size of a large microwave oven that sat atop a scaffolding equipped with castors so he could roll it around.

A century or so later, in 2012, Christensen's great-great-grandson, David Brady, built his own boxy camera, this one capable of creating the world's first gigapixel images. "It's about the same size as the cameras that were used in the 19th century," says Brady, who at the time was an electrical engineer at Duke University's Fitzpatrick Institute for Photonics. Unlike his forebear's camera, however, Brady's machine contained an array of 98 microcameras, with microprocessors that can stitch the individual images together.

In a sense, the two cameras represent the evolution of imaging. Where Christensen's relied on the methods of conventional photography, with a lens focusing light rays onto a chemically treated plate to capture a moment in time, Brady's uses the power of computing to derive data from the incoming light and select the relevant information to reconstruct a scene. Without that power, such a gigapixel camera would be impossible.

Computational imaging has been growing in popularity and sophistication over the last two decades, allowing it to overcome the limits of optical systems. Digital cameras can capture all sorts of information (not only light intensity and color, but also other properties of light, such as spectral content, polarization, and phase) and use computational methods to extract information about a scene and recreate an image from that information.

"The camera would capture all potential optical information, and then you could go back and create images from that later," says Brady, now a professor at the University of Arizona's Wyant College of Optical Sciences. With conventional photography, the number and type of adjustments that can be made after an image is taken are limited. Computational imaging, on the other hand, allows users to refocus a photo, construct a 3D picture, combine wavelengths, or stitch together separate images into one. It can correct for aberrations, generate sharp images without lenses, and use inexpensive instruments to create photos that once would have required expensive equipment, even pushing past the diffraction limit to take pictures with resolutions beyond what a camera is theoretically capable of.

"Computational imaging does well when you're trying to do something that another camera could do, but you're trying to do it cheaper, smaller, faster," says Laura Waller, head of the Computational Imaging Lab at the University of California, Berkeley. "Computational imaging is very good when you're trying to do high-dimensional things: not just 2D imaging, but 3D or hyperspectral," which images a scene at multiple wavelengths.

The difference between conventional photography and computational imaging is in part a question of designing the optical imaging system and the image processing system to work together, taking advantage of the strengths of both, Waller says. In regular imaging, the picture captured is, more or less, the result. Tweaking the image in software (to fix the contrast, for instance) does not make it computational imaging. In computational imaging, the whole system is designed to get a particular result, and what the camera captures is just a step along the way that may not look at all like the final image. It may, in fact, just be a blur.

For instance, her lab developed DiffuserCam, a system capable of creating 3D images without the use of a lens. It consists of a bumpy piece of plastic (something as simple as a piece of Scotch tape would do) placed over an image sensor. In a camera with a lens, a point in a scene maps to a point on the sensor. In the DiffuserCam, the plastic diffuser encodes a pattern of varying intensities for every point in the scene. The computer then decodes the patterns to recreate the scene. This makes for a lightweight, inexpensive system not limited by the focusing abilities of a lens. In a 2017 paper, Waller's team used the system to reconstruct 100 million 3D voxels from a 1.3-megapixel image of a plant's leaves.
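The encode-then-decode idea can be illustrated with a toy model. The sketch below is not the lab's reconstruction method, which the article does not specify and which works in 3D. It is a simplified 2D stand-in that assumes every scene point casts the same pattern, shifted, onto the sensor, so the measurement is a convolution of the scene with that pattern. It then decodes with a regularized (Wiener-style) inverse filter. The random pattern, the function names, and the noise-free measurement are all assumptions made for illustration.

```python
import numpy as np

def simulate_measurement(scene, psf):
    """Forward model: each scene point adds a shifted copy of the diffuser
    pattern to the sensor (circular convolution, computed with FFTs)."""
    return np.real(np.fft.ifft2(np.fft.fft2(scene) * np.fft.fft2(psf)))

def wiener_decode(measurement, psf, reg=1e-8):
    """Undo the convolution in the Fourier domain. The small `reg` term keeps
    the division stable where the pattern carries little energy."""
    H = np.fft.fft2(psf)
    inverse_filter = np.conj(H) / (np.abs(H) ** 2 + reg)
    return np.real(np.fft.ifft2(np.fft.fft2(measurement) * inverse_filter))

rng = np.random.default_rng(0)

# Hypothetical diffuser pattern: random intensities, normalized to unit sum.
psf = rng.random((64, 64))
psf /= psf.sum()

# Toy scene: two point sources of different brightness.
scene = np.zeros((64, 64))
scene[20, 30] = 1.0
scene[40, 12] = 0.5

measurement = simulate_measurement(scene, psf)   # looks like a featureless blur
recovered = wiener_decode(measurement, psf)      # the points reappear

brightest = np.unravel_index(np.argmax(recovered), recovered.shape)
print("brightest recovered point:", brightest)
```

The raw measurement bears no visual resemblance to the scene, yet the scene can be recovered because the pattern is known in advance. With real sensor noise, a larger `reg` or an iterative solver would be needed.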

Such a system could be useful to perform fast processing for vision systems in autonomous vehicles, for instance. It also provides a way to do imaging at challenging wavelengths for lensed systems. "X-ray lenses are terrible and they're very expensive and they waste a lot of light," Waller says. "Electron microscopy lenses are even more difficult." In fact, her group is teaming up with x-ray and electron microscopy specialists at Lawrence Berkeley National Laboratory to develop computational imaging systems for their studies.

In a different approach, Waller's group can achieve resolutions beyond the diffraction limit of a microscope objective by taking several images of the same object from different angles and using the computer to stitch the images together. They've replaced the light source in a microscope with an array of LEDs, and by turning on specific LEDs at different times they can illuminate the object from various angles. That produces high-resolution images across a large field of view, avoiding a trade-off between size and sharpness. Focusing at multiple depths provides large, high-resolution 3D images that can be used for biological applications, from the high-throughput screening of blood samples to in vivo studies of activity inside the brains of mice.
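One common way to reason about the benefit of angled illumination is a synthetic numerical aperture: the objective's NA plus the NA of the steepest illumination angle. The sketch below works through that arithmetic. The article gives no hardware details, so the LED pitch, array size, array height, objective NA, and wavelength are hypothetical values, and the function names are invented here. The lambda/NA figure is a rule of thumb for the smallest resolvable period, not a measured result.

```python
import math

def illumination_na(x_mm, y_mm, height_mm):
    """NA contributed by one LED: the sine of the angle its light makes with
    the optical axis (sample in air, LED offset (x, y) at a given height)."""
    return math.sin(math.atan2(math.hypot(x_mm, y_mm), height_mm))

def synthetic_na(objective_na, led_offsets_mm, height_mm):
    """Objective NA plus the NA of the most steeply angled LED."""
    steepest = max(illumination_na(x, y, height_mm) for x, y in led_offsets_mm)
    return objective_na + steepest

def resolution_um(wavelength_um, na):
    """Rule-of-thumb smallest resolvable period: wavelength / NA."""
    return wavelength_um / na

# Hypothetical setup: 15 x 15 LED grid, 4 mm pitch, 80 mm below the sample.
PITCH_MM, HALF_WIDTH, HEIGHT_MM = 4.0, 7, 80.0
OBJECTIVE_NA, WAVELENGTH_UM = 0.1, 0.63

led_offsets = [(i * PITCH_MM, j * PITCH_MM)
               for i in range(-HALF_WIDTH, HALF_WIDTH + 1)
               for j in range(-HALF_WIDTH, HALF_WIDTH + 1)]

na_total = synthetic_na(OBJECTIVE_NA, led_offsets, HEIGHT_MM)
print(f"objective alone: {resolution_um(WAVELENGTH_UM, OBJECTIVE_NA):.2f} um")
print(f"with LED array:  {resolution_um(WAVELENGTH_UM, na_total):.2f} um")
```

Because a low-NA objective is kept for its wide field of view while the LEDs supply the extra angular coverage, the estimate shows how field of view and resolution need not be traded against each other.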

One intriguing use of data is to take an image from one instrument and transform it into an image that could have been taken by a different piece of equipment. Aydogan Ozcan of the University of California, Los Angeles, uses neural networks to accomplish that transformation. A holographic microscope, for instance, is good at capturing a 3D image of a sample in a single snapshot. But that image is monochrome and can suffer from problems with contrast. A bright-field microscope, on the other hand, captures a broad range of wavelengths, but requires time-consuming scanning to image an entire sample. Ozcan wanted to make images with the advantages of both techniques.

To accomplish that, he took images of pollen generated by both a holographic and a bright-field microscope and fed them to a neural network, which figured out the relationship between the two types of images. Once the network had developed a model of how the two were related, it could take a holographic image and show how it would look if it had been captured by a bright-field microscope. "You can have the best of both worlds by setting this data-driven image transformation," Ozcan says. Users can take a holographic snapshot, and "it's as if those images are coming from a bright-field microscope without the bright-field three-dimensional scanning."
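The idea of learning a mapping from paired images can be shown with something far simpler than a neural network. The sketch below fits a linear filter by least squares, a deliberately minimal stand-in and not Ozcan's method. It learns from one pair of registered images and then predicts the second modality for an image it has never seen. The "second instrument" here is a made-up function (a blur plus an intensity change), and all names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

K = 3  # patch size (odd), so each output pixel sees a 3 x 3 neighborhood

def patch_matrix(img):
    """One row per K x K patch of img, plus a constant column for the bias."""
    rows = sliding_window_view(img, (K, K)).reshape(-1, K * K)
    return np.hstack([rows, np.ones((rows.shape[0], 1))])

def fit_mapping(source_img, target_img):
    """Least-squares weights that predict each target pixel from the
    source patch centered on the same location."""
    r = K // 2
    targets = target_img[r:-r, r:-r].ravel()
    weights, *_ = np.linalg.lstsq(patch_matrix(source_img), targets, rcond=None)
    return weights

def apply_mapping(source_img, weights):
    """Predict the target modality for the interior pixels of source_img."""
    h, w = source_img.shape
    return (patch_matrix(source_img) @ weights).reshape(h - K + 1, w - K + 1)

def toy_second_instrument(img):
    """Made-up 'other microscope': a 3 x 3 blur, doubled, plus an offset."""
    padded = np.pad(img, 1, mode="edge")
    return 2.0 * sliding_window_view(padded, (3, 3)).mean(axis=(2, 3)) + 0.1

rng = np.random.default_rng(1)

# Training: one registered pair of images of the same "sample".
train_src = rng.random((32, 32))
weights = fit_mapping(train_src, toy_second_instrument(train_src))

# Inference: a new image from the first instrument only.
new_src = rng.random((32, 32))
predicted = apply_mapping(new_src, weights)
actual = toy_second_instrument(new_src)[1:-1, 1:-1]
print("largest prediction error:", np.abs(predicted - actual).max())
```

A linear filter can only capture a relationship this simple. The appeal of a neural network is that it can learn far more complicated mappings from the same kind of paired data, which is why the quantity and consistency of training pairs matters so much.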

Neural networks are a hot area in artificial intelligence. Built on a rough analogy to a living brain, they process data through layers of statistically weighted artificial neurons to discover patterns a human wouldn't see. Ozcan uses the same approach, which he calls cross-modality learning, to essentially turn a less expensive microscope with resolution too limited to see nanoscale features into an expensive one that pushes past that limit.

Credit: Aydogan Ozcan, UCLA

UCLA owns a microscope that uses a technique called stimulated emission depletion to achieve super resolution, higher than the diffraction limit. Ozcan estimates the instrument cost more than US $1 million when it was purchased about a decade ago. He has the neural net compare images from that instrument with others from a confocal microscope, which might cost between US $200,000 and $400,000. "We have trained that neural net to basically boost up its resolution to mimic a more expensive microscope," he says.

A similar trick could aid in biopsies, which rely on images of tissue samples stained with various contrast agents. Staining a tissue can be time-consuming, and if a pathologist wants to look at a different stain, that requires starting again with a different bit of tissue. Ozcan uses computational imaging to create virtual stains. First, he trained a neural network to look at the autofluorescence from unstained samples and relate it to images of stained samples, essentially transforming a fluorescence microscope into a bright-field microscope. Then he had the computer compare images of samples treated with different stains. Eventually what he wound up with was a way to take an unstained tissue sample and turn it into images of any stain he wanted. He showed a panel of diagnosticians images of samples stained by pathologists and those created virtually, and they could not tell the difference.

One particular stain of kidney tissue is exotic and expensive, Ozcan says, and can take a person about three hours to create. Creating the stained image computationally takes only a minute or two. Ozcan and one of his colleagues, Yair Rivenson, have co-founded a company, Pictor Labs, to commercialize their virtual staining techniques.

Microscopy is especially suitable for computational imaging, Ozcan says, because microscopes generate a lot of image data quickly, without variations in lighting and angles that might be more difficult for the computer to sort out. It's possible to generate, over the course of several days, a few hundred thousand pairs of images for the neural network to train on.

That's not to say the technology is limited to microscopy. Just about any imaging technology could benefit from computational enhancement. In virtual and augmented reality, for instance, researchers are still striving to make high-quality, lightweight optics that can be worn close to the eye and don't suffer from chromatic aberrations while projecting desired images. "That's certainly not a solved problem yet," Waller says.

Even consumer applications are benefiting. "If you look at cell phone cameras these days, they're full of computational imaging," she says. The Google Pixel phone, for instance, has more than one pixel under each microlens to provide the camera with directional information, which in turn allows it to measure depth. "They can do all these neat things, like making your background more blurred or finding your head so they can put a fake hat on you," Waller says. "They're trying to go for extra information or trying to make the camera small and cheap, which is something computational imaging is good for."

It might seem that the benefit of having smaller, cheaper optics would be offset by the cost of added computing, but because computing technology has improved so much faster than camera technology, that's not the case. Since their invention, digital cameras have increased from 1 to 10 megapixels, Brady says. "It's improved by a factor of 10 over a time period where memory got like a million times cheaper and communication bandwidth got a million times faster." The data produced by cameras with lens arrays capturing multispectral images that last several seconds is massive (as much as 10 terabytes a day, in some cases), but neural processors based on machine learning algorithms can handle that, he says.

That kind of computing power can overcome some of the limits of optics. "It's just extremely difficult to make a single lens that can resolve more than 10 megapixels for physical objects," Brady says. "But it's also completely unnecessary. When you go to these parallel processing solutions, you change the structure of the optical design and then you can get to really arbitrarily large pixel counts."

Brady is also now chief scientist at Aqueti, a company he founded based on technology licensed from his former lab at Duke University. The company makes security cameras outfitted with an array of lenses to capture high-resolution video of large areas, then allows users to move around the scene and zoom in on any area within the field of view, without any movement of the camera. A video on the company's website shows a wide-angle view of a couple of city blocks, then zooms in on construction workers on a roof, or focuses on a distant clock tower.

He imagines all sorts of new uses for technology with such capabilities. "Let's say you're getting married. You set up the device at the wedding. It captures all aspects of the wedding and allows you to go back to relive it in virtual reality. Why wouldn't you want to do that?"

It's difficult to say what all the applications for computational imaging might be, Brady says. "The kinds of things you can see, the kind of resolution that you have is very unprecedented. Insanely high resolution really," he says, on the order of being able to focus down to a millimeter from a kilometer away. "We're in a phase where a new medium has emerged, but it's still in the control of the technologists. As it gets used by artists and photographers, who knows what's going to happen?"

Neil Savage writes about science and technology in Lowell, Massachusetts.
