Toward standardized tests for assessing lidars in autonomous vehicles

Today, autonomous vehicles (AVs) and advanced driver assistance systems (ADASs) are rapidly growing research directions aimed at increasing vehicle and road safety. Both technologies minimize human error by enabling cars to “perceive” their surroundings and act accordingly. This is achieved using light detection and ranging (lidar) technology, one of the most important and versatile components in AVs. Lidars provide a three-dimensional map of all objects around the vehicle regardless of external lighting conditions. This map, updated hundreds of times per second, can be used to estimate the position of the vehicle relative to its surroundings in real time.

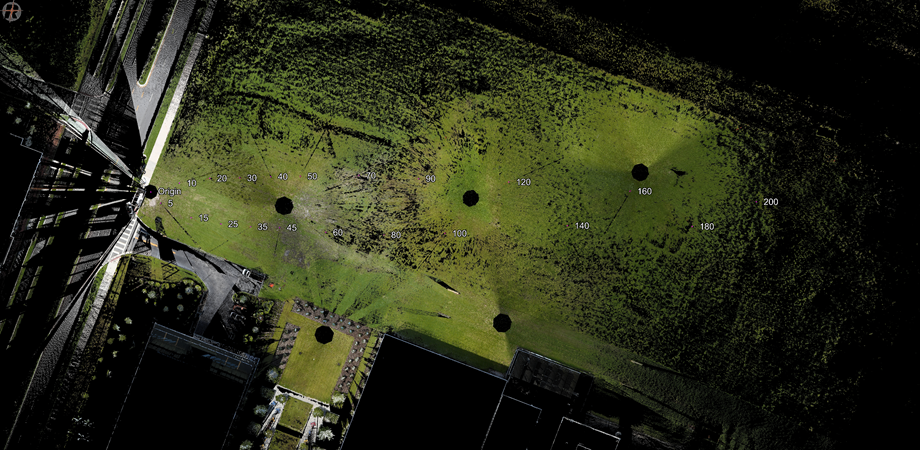

Reflective road signs were placed near the targets to measure the precision and range of automotive lidars under more challenging conditions. Credit: The Authors, doi 10.1117/1.OE.62.3.031211

Despite their crucial role in both AVs and ADASs, lidars currently lack a standardized measure for describing their performance. In other words, there is no widely accepted protocol for comparing one lidar with another. Although one could arguably compare lidars based solely on their manufacturers' specifications, such comparisons are not very useful. This is because the performance metrics used by the manufacturers vary and are typically confidential. Moreover, unlike lidars used for science, surveying, or defense applications, automotive-grade lidars are optimized for manufacturability, cost, and size. This is likely to lead to marked variations in performance that would be impossible to quantify without standardized tests.

To tackle this problem, Dr. Paul McManamon of Exciting Technology formed a national group in conjunction with SPIE and launched a three-year effort to develop tests and performance standards for lidars used in AVs and ADASs. The tests during the first year were led by Dr. Jeremy P. Bos, an associate professor at Michigan Technological University (MTU), with assistance from his PhD student, Zach Jeffries. Other authors included Charles Kershner from the National Geospatial-Intelligence Agency, who set up a ground truth Riegl lidar for the test, and Akhil Kurup, also of MTU. In a paper published recently in Optical Engineering, the team reports the findings of the first-year tests and a brief outline of the larger three-year plan.

The objective of these tests was to evaluate the range, accuracy, and precision of eight automotive-grade lidars using a survey-grade lidar as a reference. Bos, Jeffries, and the team set up various targets along a 200-meter path in an open field in Kissimmee, Florida. One key aspect of these targets that made the tests stand out from previous studies was that they were near-perfect matte surfaces with a calibrated 10% reflectivity across a wide spectrum. The researchers also measured the ability of the lidars to detect the target among highly reflective road signs.
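To make the terms concrete, here is a minimal sketch of how accuracy and precision might be computed for one lidar looking at one target. It assumes accuracy means the mean signed range error relative to the reference and precision means the standard deviation of repeated range readings; the paper's exact definitions may differ. The function name and sample readings are hypothetical and are not data from the tests.

```python
import statistics

def range_stats(measured_m, reference_m):
    """Return (accuracy, precision) in meters for repeated range readings.

    Assumed definitions (hypothetical, for illustration only):
      accuracy  = mean signed error against the reference distance
      precision = population standard deviation of the readings
    """
    errors = [m - reference_m for m in measured_m]
    accuracy = statistics.fmean(errors)
    precision = statistics.pstdev(measured_m)
    return accuracy, precision

# Made-up readings of a target whose reference distance is 50.00 m.
samples = [50.02, 50.05, 49.99, 50.03, 50.01]
acc, prec = range_stats(samples, 50.00)
print(f"accuracy: {acc * 100:.1f} cm, precision: {prec * 100:.1f} cm")
```

In this made-up case the lidar reads about 2 cm long on average (accuracy) and its readings scatter by about 2 cm (precision). The two numbers are independent: a device can be precise without being accurate, and vice versa.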

The test results were, in general, consistent with the values advertised in the manufacturers’ datasheets. However, although the mean precision across all the tested devices was 2.9 cm, the distribution of the measured values was not Gaussian. Simply put, there was a non-negligible probability for these devices to report very imprecise values (error greater than 10 cm). In fact, in some cases, the measured range deviated from the real value by as much as 20 cm. Another important result was that the reflective road signs impaired the target detection performance of the lidars. “The advertised range performance of lidars pertains to very specific conditions, and performance degrades significantly in the presence of a highly reflective adjacent object,” said Bos.
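A rough calculation shows why the non-Gaussian finding matters. The sketch below assumes, purely for illustration, that the 2.9 cm figure is the standard deviation of a zero-mean Gaussian error, and asks how often such a device would be off by more than 10 cm. The function name is hypothetical, and this is not the analysis used in the paper.

```python
import math

def gaussian_tail_probability(threshold_cm, sigma_cm):
    """P(|error| > threshold) for a zero-mean Gaussian with std sigma_cm."""
    # Two-sided tail of the normal distribution via the complementary
    # error function: P(|X| > t) = erfc(t / (sigma * sqrt(2))).
    return math.erfc(threshold_cm / (sigma_cm * math.sqrt(2)))

# Assumption: treat the reported 2.9 cm mean precision as a Gaussian sigma.
p = gaussian_tail_probability(10.0, 2.9)
print(f"P(|error| > 10 cm) under a Gaussian model: {p:.2e}")
```

Under that assumption, errors larger than 10 cm would occur in well under 0.1% of measurements. A device that produces such errors with non-negligible probability therefore has heavier tails than a Gaussian model predicts, which a single precision figure on a datasheet does not reveal.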

Overall, the first round of tests provided important insights into the performance differences between different lidars, suggesting that the metrics reported by their respective manufacturers are not reliable. Still, Bos emphasizes this is only the beginning. “The first-year tests were the simplest of them. In the second year, we will duplicate these tests for the characterized lidars while introducing confusion resulting from other automotive lidars approaching from the opposite direction. Additionally, we will measure the eye safety of the lidars,” said Bos. “Finally, in the third year, we will include weather effects as a culmination of the complexity build-up.”

The team’s efforts will help decisionmakers, engineers, and manufacturers realize the importance of lidar standardization and ultimately make our roads safer.

Read the open access paper by Jeffries et al., “Towards open benchmark tests for automotive lidars, year 1: static range error, accuracy, and precision,” Opt. Eng. 62(3) 031211 (2023). doi: 10.1117/1.OE.62.3.031211
