New Design Advances Optical Neural Networks that Compute at the Speed of Light Using Engineered Matter

A diffractive deep neural network is an optical machine-learning framework that uses diffractive surfaces and engineered matter to perform computation all-optically. After it is designed and trained in a computer using machine learning, each network is physically fabricated, for example by 3D printing or lithography, to engineer the trained network model into matter. This 3D structure of engineered matter is composed of transmissive and/or reflective surfaces that together perform machine-learning tasks through light-matter interaction and optical diffraction, at the speed of light, and without the need for any power except for the light that illuminates the input object. This ability is especially significant for recognizing target objects more quickly and with significantly less power than standard computer-based machine-learning systems, and it might provide major advantages for autonomous vehicles and various defense-related applications, among others.

Introduced by UCLA researchers, this framework was experimentally validated for object classification and imaging, providing a scalable and energy-efficient optical computation framework. In subsequent research, UCLA engineers further improved the inference performance of diffractive optical neural networks by integrating them with standard digital deep neural networks, forming hybrid machine-learning models that perform computation partly through light diffraction in matter and partly in a computer.

In their latest work, published in Advanced Photonics, an open access journal co-published by SPIE and Chinese Laser Press, the UCLA group has taken full advantage of the inherent parallelization capability of optics and significantly improved the inference and generalization performance of diffractive optical neural networks, helping to close the gap between all-optical and standard electronic neural networks.

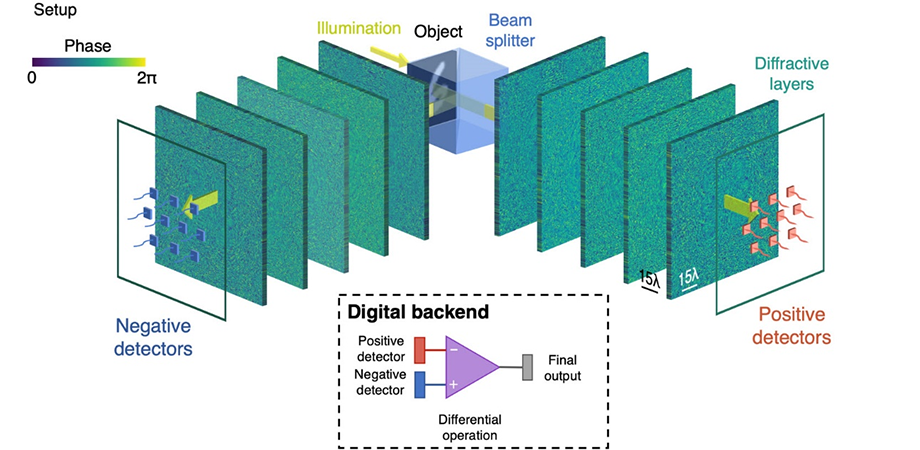

One of the key improvements incorporated a differential detection scheme, where each class score at the optical network's output plane is calculated using two different detectors, one representing positive numbers and the other representing negative numbers. The correct object class (for example, cars, airplanes, ships, etc.) is inferred by the detector pair that has the largest normalized difference between the positive and the negative detectors. This differential detection scheme is also combined with parallel-running diffractive optical networks, where each one is specialized to specifically recognize a subgroup of object classes. This class-specific diffractive network design significantly benefits from the parallelism and the scalability of optical systems, forming parallel light paths within 3D engineered matter to separately compute the class scores of different types of objects.
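The differential detection rule described above can be illustrated with a minimal sketch. It assumes that the "normalized difference" for each class is the difference of the two detector signals divided by their sum; that normalization, the function names, and the detector readings are hypothetical choices for illustration and are not taken from the published work.

```python
from typing import List, Sequence


def differential_scores(positive: Sequence[float],
                        negative: Sequence[float]) -> List[float]:
    """Return one score per class from a pair of detector readings.

    Assumption: the score is (I_plus - I_minus) / (I_plus + I_minus).
    """
    if len(positive) != len(negative):
        raise ValueError("each class needs one positive and one negative detector")
    scores = []
    for i_plus, i_minus in zip(positive, negative):
        total = i_plus + i_minus
        # Guard against a detector pair that received no light at all.
        scores.append((i_plus - i_minus) / total if total > 0 else 0.0)
    return scores


def predict_class(positive: Sequence[float],
                  negative: Sequence[float]) -> int:
    """Pick the class whose detector pair has the largest score."""
    scores = differential_scores(positive, negative)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical optical power readings for three classes.
positive = [0.8, 0.3, 0.5]
negative = [0.2, 0.6, 0.5]

scores = differential_scores(positive, negative)
predicted = predict_class(positive, negative)
print(scores, predicted)  # class 0 has the largest normalized difference
```

In a class-specific arrangement, the detector pairs read from each parallel diffractive network could be concatenated into the same two lists before applying the same selection step.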

Operation principles of a differential diffractive optical neural network. Since diffractive optical neural networks operate using coherent illumination, phase and/or amplitude channels of the input plane can be used to represent information.

These new design strategies achieved unprecedented levels of inference accuracy for all-optical neural-network-based machine learning. For example, in one implementation UCLA researchers numerically demonstrated blind testing accuracies of 98.59%, 91.06%, and 51.44% for recognizing images of handwritten digits, fashion products, and the CIFAR-10 grayscale image dataset (composed of airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks), respectively. For comparison, these results come close to the performance of some earlier generations of all-electronic deep neural networks, for example LeNet, which achieves classification accuracies of 98.77%, 90.27%, and 55.21% on the same datasets, respectively.

More recent electronic neural network designs, such as ResNet, achieve much better performance, still leaving a gap between the performances of all-optical and electronic neural networks. This gap, however, is balanced by important advantages provided by all-optical neural networks, such as the inference speed, scalability, parallelism, and the low-power requirement of passive optical networks that utilize engineered matter to compute through diffraction of light.

This research, supported by the Koç Group (Turkey), the National Science Foundation (U.S.), and the Howard Hughes Medical Institute (U.S.), was led by Dr. Aydogan Ozcan, who is a Chancellor's Professor at the Electrical and Computer Engineering Department of UCLA and an associate director of the California NanoSystems Institute (CNSI). The other authors of this work are graduate students Jingxi Li, Deniz Mengu, and Yi Luo, as well as Dr. Yair Rivenson, an adjunct professor of electrical and computer engineering at UCLA.

"Our results provide a major advancement to bring optical neural network-based low power and low-latency solutions for various machine-learning applications," said Ozcan. Moreover, these systematic advances in diffractive optical network designs may bring us a step closer to the development of next-generation, task-specific, and intelligent computational camera systems.

Read the original research article in the open-access journal Advanced Photonics: J. Li, D. Mengu, Y. Luo, Y. Rivenson, and A. Ozcan, "Class-specific differential detection in diffractive optical neural networks improves inference accuracy," Adv. Photon. 1(4), 046001 (2019).

